RL Policy & Tron1

Demo available here. Note: registration required.

Overview

The Tron1 robot is a multi-modal biped robot that can be used for humanoid RL research. Much of its software is open source and can be found here.

In this project, we evaluate Tron1 using two reinforcement learning policies under two complementary conditions:

- Movement tests, which assess task execution under explicit motion commands

- Idle drift tests, which assess passive stability when no motion command is issued

Together, these experiments allow us to study both active locomotion performance and intrinsic stability characteristics of the policies.

Policies under test

We compare two reinforcement learning policies that share the same high-level objective (bipedal locomotion) but differ in training environment and design assumptions:

- isaacgym: A policy trained using NVIDIA Isaac Gym, emphasizing fast, large-scale simulation and efficient optimization. These policies typically prioritize robust execution of commanded motions under simplified or tightly controlled dynamics.

- isaaclab: A policy trained using Isaac Lab, emphasizing modularity, richer task abstractions, and closer alignment with downstream robotics workflows. This often introduces additional internal structure and constraints in the policy.

Implementation details and training setups are available at:

Movement test: policy comparison under commanded motion

We first run a movement test where the robot is asked to move forward and rotate relative to its starting pose. This evaluates how well each policy executes a simple but non-trivial locomotion task.

The test itself is straightforward:

- Move forward 5 meters

- Rotate 150 degrees

- Complete the task within a fixed timeout
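The three conditions above can be expressed as a single pass/fail check on the start and final poses. The following is a minimal sketch, not the assertion used in the actual test file: the `Pose2D` type, the function name, and both tolerances (`pos_tol_m`, `yaw_tol_deg`) are hypothetical, and it assumes the forward distance is measured as straight-line displacement from the start.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float    # metres, world frame
    y: float    # metres, world frame
    yaw: float  # radians


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the range [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def movement_goal_reached(
    start: Pose2D,
    final: Pose2D,
    elapsed_s: float,
    forward_m: float = 5.0,
    dyaw_deg: float = 150.0,
    timeout_s: float = 35.0,
    pos_tol_m: float = 0.5,      # hypothetical tolerance
    yaw_tol_deg: float = 10.0,   # hypothetical tolerance
) -> bool:
    """Return True if distance, rotation, and time limit are all satisfied."""
    # Straight-line displacement between the start and final positions.
    distance = math.hypot(final.x - start.x, final.y - start.y)
    # Signed heading change, then its wrapped difference from the target.
    yaw_change = wrap_angle(final.yaw - start.yaw)
    yaw_error_deg = abs(math.degrees(wrap_angle(yaw_change - math.radians(dyaw_deg))))
    return (
        elapsed_s <= timeout_s
        and abs(distance - forward_m) <= pos_tol_m
        and yaw_error_deg <= yaw_tol_deg
    )
```

Wrapping the yaw error keeps the check correct for targets near 180 degrees, where a naive subtraction could report an error of almost 360 degrees.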

Parameterizing the test

Using the artefacts.yaml file, the movement test is configured as follows:

    policy_test:
      type: test
      runtime:
        framework: ros2:jazzy
        simulator: gazebo:harmonic
      scenarios:
        defaults:
          pytest_file: test/art_test_move.py
          output_dirs: ["test_report/latest/", "output"]
        settings:
          - name: move_face_with_different_policies
            params:
              rl_type: ["isaacgym", "isaaclab"]
              move_face:
                - {name: "dyaw_150+fw_5m", forward_m: 5.0, dyaw_deg: 150, hold: false, timeout_s: 35}

Key points from above:

- The test is conducted using pytest (and so pytest_file points to our test file).

- Artefacts will look in the two folders described in output_dirs for uploads to the dashboard.

- We have two sets of parameters:

  - rl_type: two parameters: "isaacgym" and "isaaclab"

  - move_face: one parameter set specifying forward distance (5 m), rotation angle (150 degrees), and timeout (35 seconds)

The test runs twice: once with the isaacgym policy and once with the isaaclab policy. Both runs use the same move_face parameter set, which determines how far the robot should travel and how much it should rotate.
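The run count follows from combining every value of each parameter list. The sketch below illustrates that grid-style expansion in plain Python; it is not Artefacts' own implementation, and `expand_grid` is a hypothetical helper name.

```python
from itertools import product

# Parameter lists copied from the `params` section of the configuration.
params = {
    "rl_type": ["isaacgym", "isaaclab"],
    "move_face": [
        {"name": "dyaw_150+fw_5m", "forward_m": 5.0, "dyaw_deg": 150,
         "hold": False, "timeout_s": 35},
    ],
}


def expand_grid(params):
    """Return one dict per combination of the listed parameter values."""
    keys = list(params)
    return [dict(zip(keys, combo)) for combo in product(*(params[k] for k in keys))]


runs = expand_grid(params)
for run in runs:
    # Two policies x one move_face set = two runs.
    print(run["rl_type"], run["move_face"]["name"])
```

Adding a second entry to move_face would double the number of runs under this scheme, which is worth keeping in mind when simulation time is limited.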

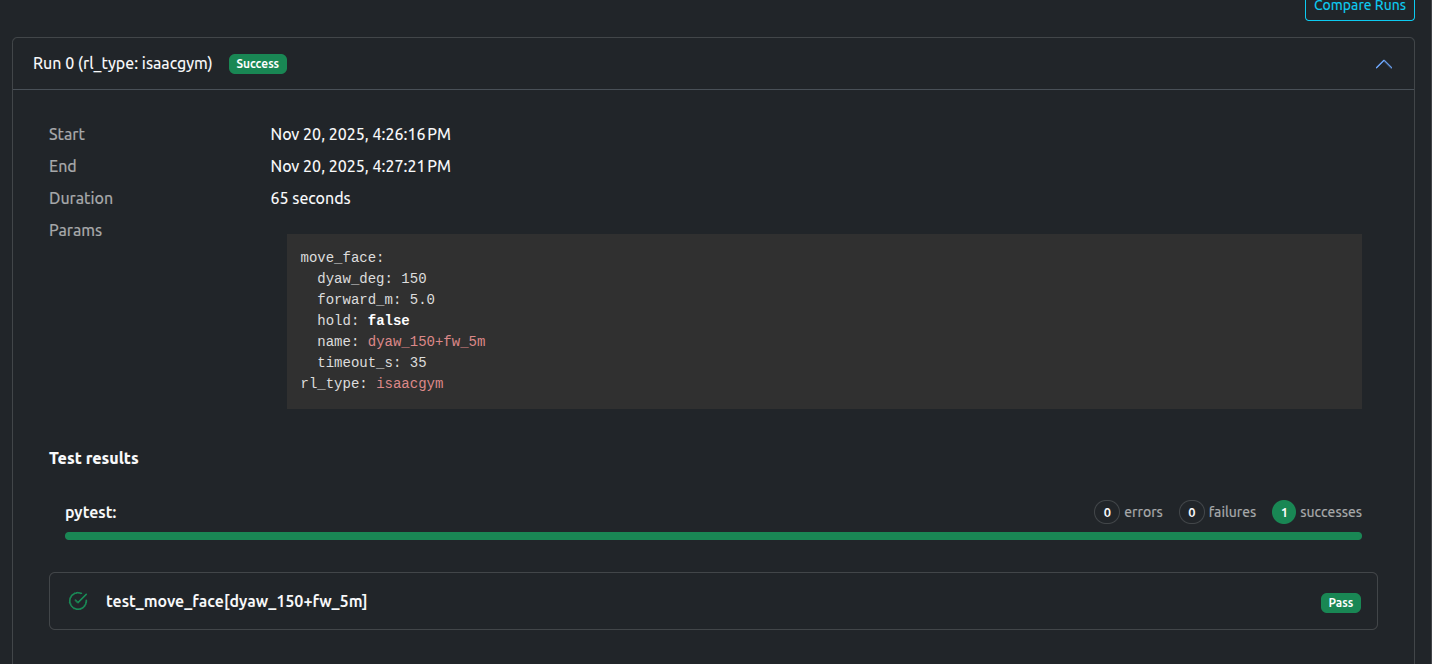

Results: isaacgym

When using the isaacgym policy, we can see (from a birdseye recording) the robot successfully rotating and then moving forward:

The dashboard notes the test as a success:

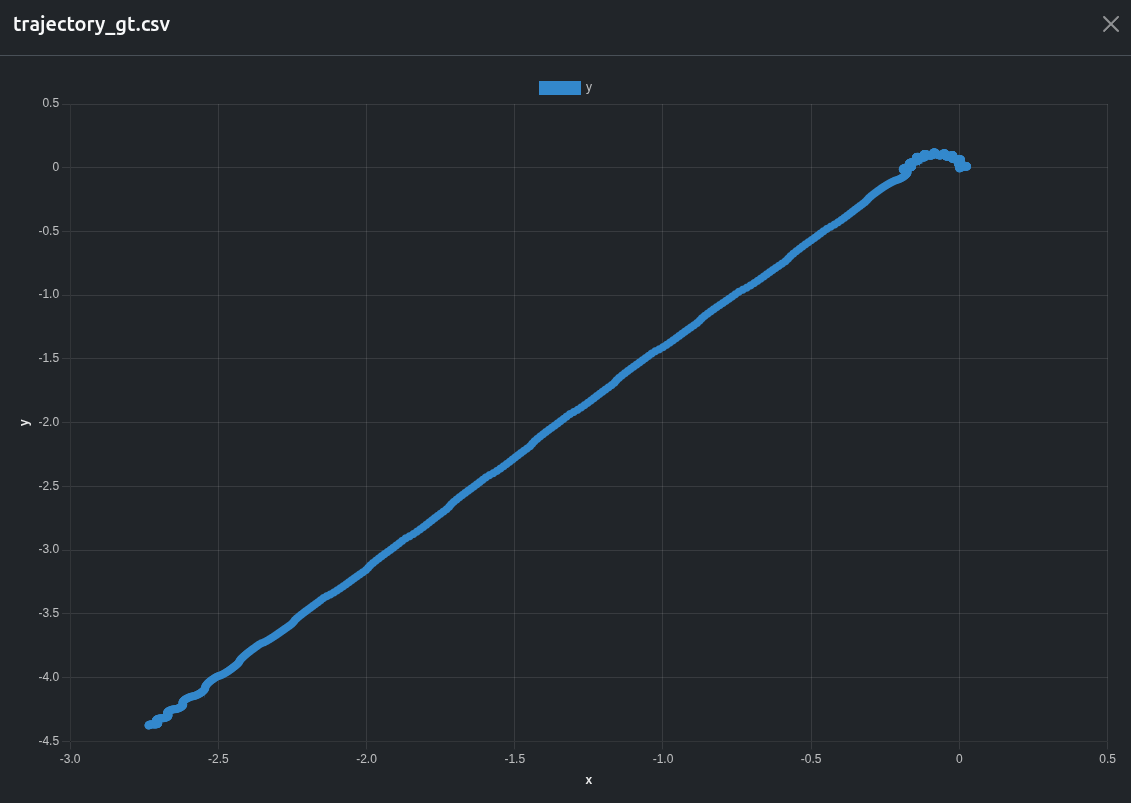

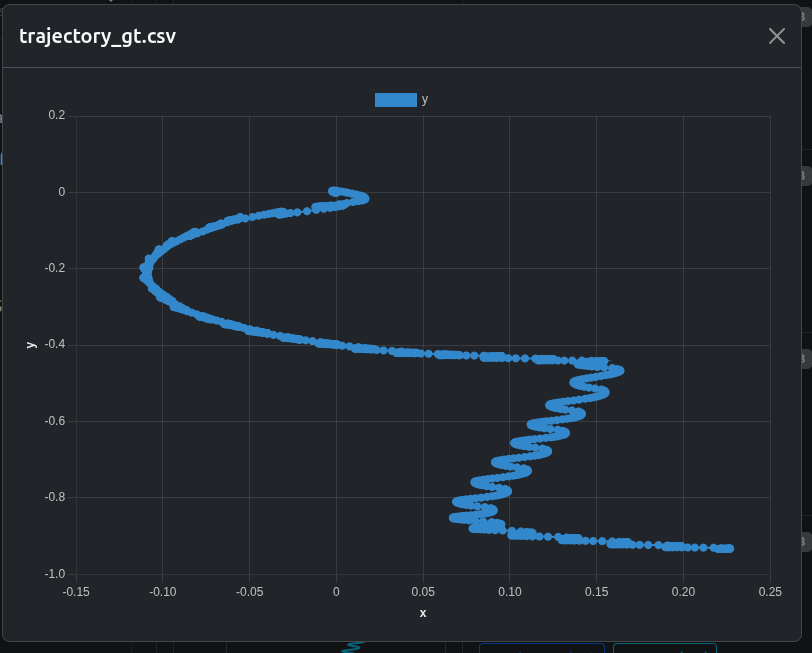

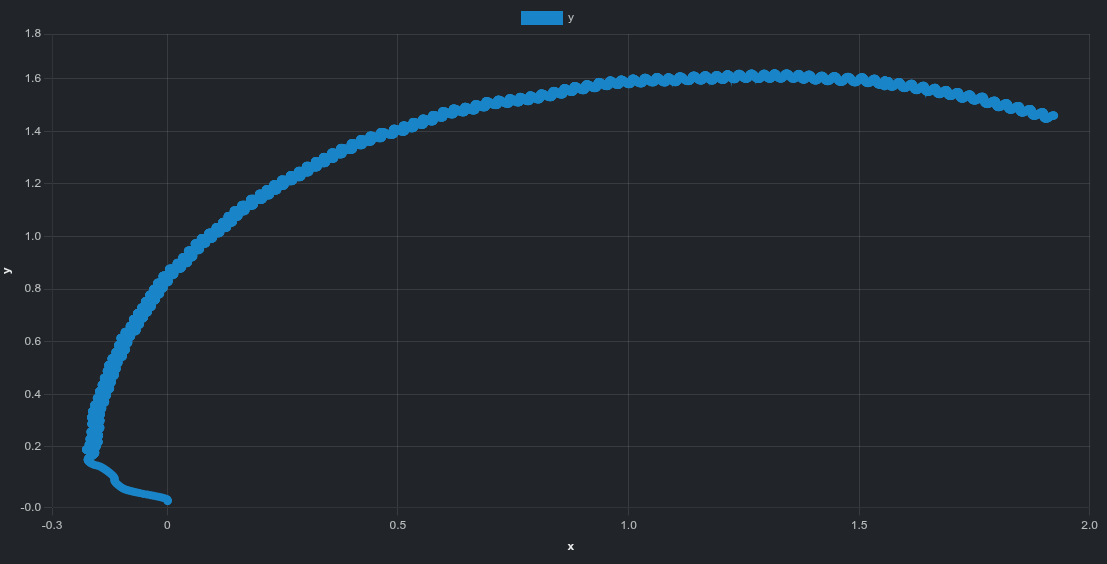

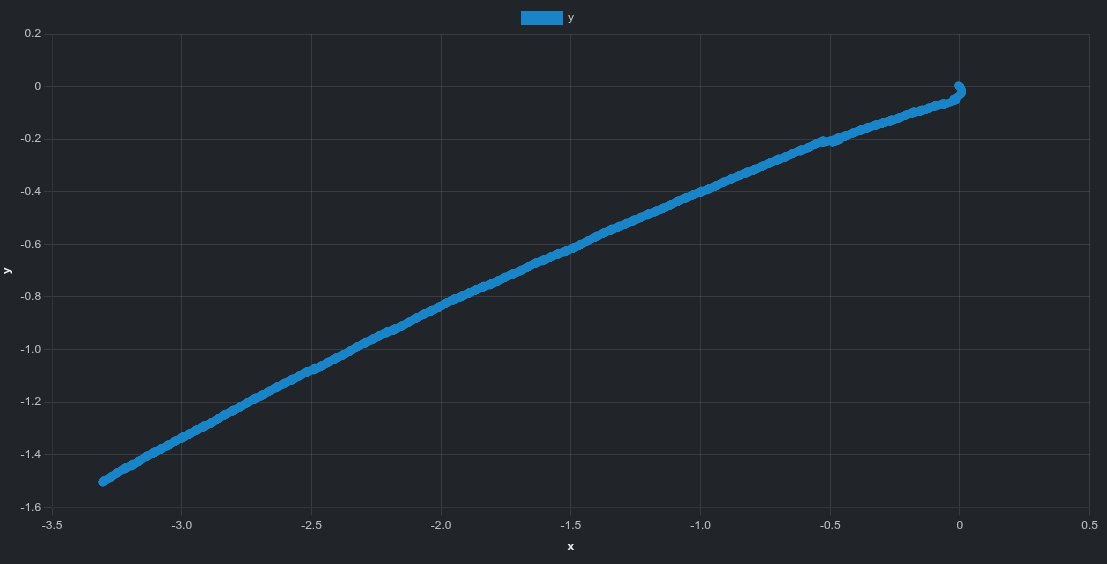

A CSV we created during the test, recording the ground truth movement, is automatically converted by the dashboard into an easy-to-read chart.

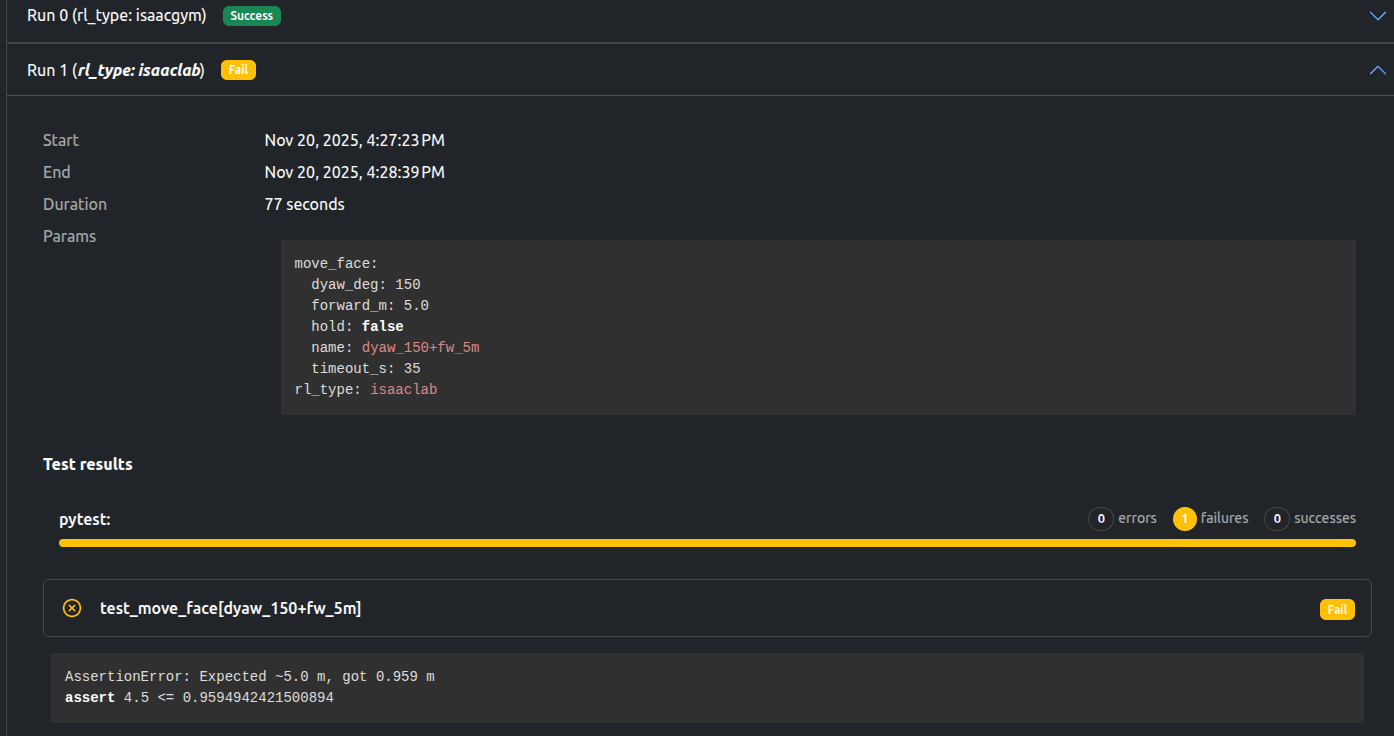
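Producing such a CSV needs nothing beyond the standard library. The sketch below serializes time-stamped ground truth positions; the column names (`t_s`, `x_m`, `y_m`) and the function name are assumptions for illustration, since the layout the dashboard expects is not specified here.

```python
import csv
import io


def trajectory_to_csv(samples):
    """Serialize (t_s, x_m, y_m) samples to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["t_s", "x_m", "y_m"])  # assumed column names
    for t, x, y in samples:
        # Fixed precision keeps the file compact and diff-friendly.
        writer.writerow([f"{t:.3f}", f"{x:.4f}", f"{y:.4f}"])
    return buf.getvalue()


csv_text = trajectory_to_csv([(0.0, 0.0, 0.0), (0.5, 0.1, -0.02)])
print(csv_text)
```

The resulting text would then be written to a file inside one of the configured output_dirs so that it is picked up for upload.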

Results: isaaclab

With the isaaclab policy, we see there is still work to be done. The dashboard notes the test as a fail (and shows us the failing assertion), and the birdseye video shows the robot failing to reach its goal.

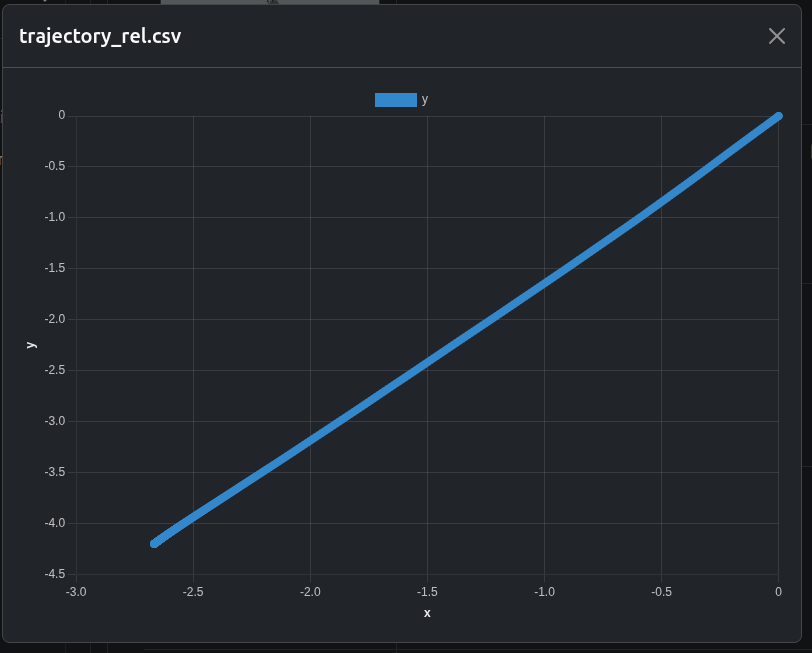

We also have a CSV (automatically converted to a chart) of the estimated trajectory (i.e., what the robot thinks it has done), which differs widely from the ground truth.
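One way to put a number on the gap between the estimated and ground truth trajectories is a per-sample position error and its root mean square. This is a minimal sketch that assumes both trajectories have already been resampled to the same timestamps; the function names are hypothetical and not part of the test code.

```python
import math


def trajectory_errors(estimated, ground_truth):
    """Euclidean distance between time-aligned (x, y) samples."""
    if len(estimated) != len(ground_truth):
        raise ValueError("trajectories must have the same number of samples")
    return [
        math.hypot(ex - gx, ey - gy)
        for (ex, ey), (gx, gy) in zip(estimated, ground_truth)
    ]


def rmse(errors):
    """Root mean square of a non-empty list of errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Toy data: the estimate ends 5 m away from where the robot really is.
errors = trajectory_errors([(0.0, 0.0), (3.0, 4.0)], [(0.0, 0.0), (0.0, 0.0)])
print(max(errors), rmse(errors))
```

The maximum error highlights the worst divergence, while the RMSE summarizes the whole run in one value that can be compared across policies.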

Idle drift comparison (stationary robot)

The movement test evaluates task execution under command. To complement this, we perform an idle drift test, where the robot receives no motion command at all.

After initializing the robot in a neutral standing pose, no velocity, pose, or locomotion commands are issued. The policy continues running normally, and any observed motion is therefore uncommanded.

This test isolates passive stability behavior, independent of task execution.

Test setup and durations

Idle drift is evaluated at two time scales:

- 10 seconds, capturing immediate transients and short-term controller bias

- 60 seconds, capturing slow accumulation effects such as yaw creep or gradual planar drift

Using both durations allows us to distinguish between short-term stability and long-term equilibrium behavior.

Parameterization

policy_drift:

type: test

runtime:

framework: ros2:jazzy

simulator: gazebo:harmonic

scenarios:

defaults:

output_dirs: ["test_report/latest/", "output"]

metrics: "output/metrics.json"

pytest_file: test/art_test_drift.py

settings:

- name: idle_drift_compare_policies

params:

rl_type: ["isaacgym", "isaaclab"]

durations_s: [10, 60]

Key points from above:

- The test is executed using pytest

- Each run corresponds to one (policy, duration) pair

- Metrics are written to metrics.json and automatically displayed in the Artefacts dashboard

Visual results

For each duration, the two policies are compared side-by-side using birdseye recordings.

10 second idle test

[Birdseye recordings, side by side: isaacgym (left), isaaclab (right)]

60 second idle test

[Birdseye recordings, side by side: isaacgym (left), isaaclab (right)]

Metrics and evaluation

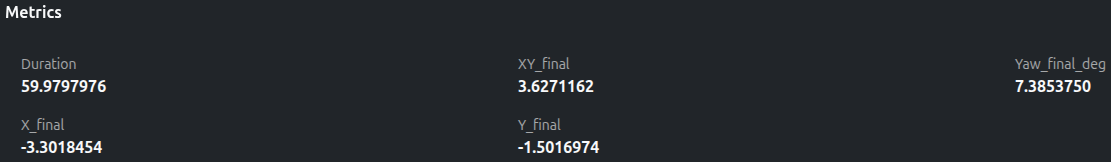

For each idle drift run, the following ground truth metrics are reported:

- Duration – actual elapsed runtime of the test

- X_final, Y_final – final planar displacement relative to the start

- XY_final – total planar drift magnitude

- Yaw_final_deg – accumulated yaw drift

An example metrics panel from the dashboard is shown below:

These metrics provide a compact quantitative summary that complements the visual observations.
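The reported metrics can be derived from a sequence of ground truth poses. The sketch below is one possible computation, not the code used in the test: it assumes samples of the form (t_s, x_m, y_m, yaw_rad) in a fixed world frame, and it assumes the JSON keys match the metric names listed above.

```python
import json
import math


def drift_metrics(samples):
    """Compute idle drift metrics from time-ordered (t, x, y, yaw) samples."""
    t0, x0, y0, _ = samples[0]
    t1, x1, y1, _ = samples[-1]
    # Sum wrapped yaw increments so a crossing of +/-pi does not look
    # like a jump of nearly 360 degrees.
    yaw_total = 0.0
    for (_, _, _, a), (_, _, _, b) in zip(samples, samples[1:]):
        d = b - a
        yaw_total += math.atan2(math.sin(d), math.cos(d))
    dx, dy = x1 - x0, y1 - y0
    return {
        "Duration": t1 - t0,
        "X_final": dx,
        "Y_final": dy,
        "XY_final": math.hypot(dx, dy),
        "Yaw_final_deg": math.degrees(yaw_total),
    }


samples = [(0.0, 0.0, 0.0, 0.0), (5.0, 0.03, 0.0, 0.1), (10.0, 0.03, 0.04, 0.2)]
metrics = drift_metrics(samples)
# The serialized text is what would be written to output/metrics.json.
print(json.dumps(metrics, indent=2))
```

Accumulating yaw incrementally matters mostly for the 60 second runs, where slow yaw creep could otherwise be hidden by angle wrap-around.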

Trajectory plots (60 second idle test)

In addition to scalar metrics, the dashboard provides interactive planar trajectory plots derived from ground truth pose data.

Global trajectory view

[Trajectory plots, side by side: isaacgym (left), isaaclab (right)]

- isaacgym exhibits a pronounced curved drift trajectory.

- isaaclab shows a more linear overall drift direction.

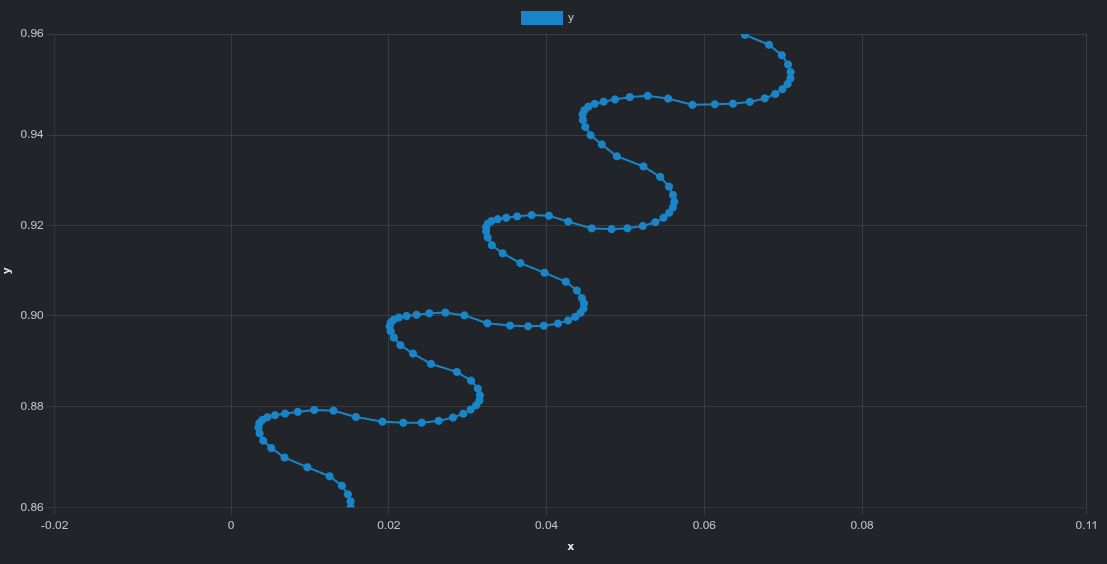

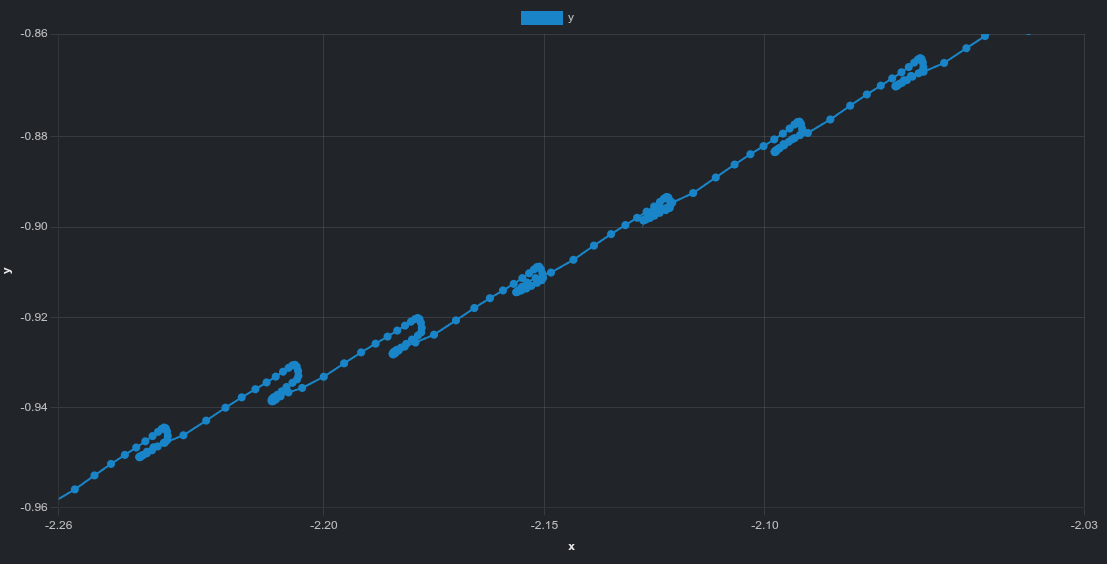

Zoomed-in trajectory view

[Trajectory plots, side by side: isaacgym (left), isaaclab (right)]

The zoomed-in view reveals fine-scale structure:

- oscillatory behavior for isaacgym,

- smaller but irregular deviations for isaaclab.

These patterns are difficult to see in videos alone but become clear when inspecting trajectory data directly.
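Fine-scale structure of this kind can also be summarized numerically. One simple option, shown here as a sketch and not something the dashboard computes, is the ratio of total path length to net displacement: a straight drift gives a value close to 1, while oscillation or irregular wandering pushes it higher. The function names are hypothetical.

```python
import math


def path_length(points):
    """Sum of segment lengths along a list of (x, y) points."""
    return sum(
        math.hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(points, points[1:])
    )


def tortuosity(points):
    """Path length divided by straight-line start-to-end distance."""
    net = math.hypot(points[-1][0] - points[0][0], points[-1][1] - points[0][1])
    length = path_length(points)
    if net == 0.0:
        # No net displacement: infinite if the robot moved at all.
        return math.inf if length > 0.0 else 1.0
    return length / net


straight = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
zigzag = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0), (3.0, 1.0), (4.0, 0.0)]
print(tortuosity(straight), tortuosity(zigzag))
```

Measurement noise in the pose data also inflates this ratio, so it is best compared between runs recorded at the same sampling rate.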

What we learn from these tests

The movement and idle drift experiments are complementary:

- The movement test evaluates task execution under explicit command

- The idle drift test evaluates passive stability in the absence of command

By combining both, we obtain a clearer picture of policy behavior under both active and idle conditions, helping distinguish between execution errors and intrinsic stability characteristics.

Data available after the tests

For both movement and idle drift experiments, Artefacts provides access to:

- ROSbag recordings

- Video of the active robot in Gazebo, both birdseye and first person

- Stdout and stderr logs

- Debug logs

- CSV of the trajectory (estimated) automatically displayed as a graph in the dashboard

- CSV of the trajectory (ground truth) automatically displayed as a graph in the dashboard

- Metrics summaries for quantitative comparison

Artefacts Toolkit Helpers

For this project, we used the following helpers from the Artefacts Toolkit:

- get_artefacts_params: used to select the RL policy and test parameters

- extract_video: used to generate videos from recorded rosbags